AI scams explained: Common tactics and how to stay safe

Artificial intelligence (AI) scams are an evolution of traditional scams that use AI to target more people and operate more efficiently. These scams work by generating realistic-sounding messages faster than any human could, often responding to victims in real time.

While traditional cons relied on mass emails, often riddled with spelling and grammar errors, AI tools can create targeted emails that sound professional and legitimate. They can also create voice clones, images, and videos that are increasingly indistinguishable from the real thing.

This article describes the most common AI scams, how to spot the signs of artificially generated content, and how to protect yourself from falling victim to one of these attacks.

What are AI scams?

AI scams are simply scams that harness the power of AI tools to increase their effectiveness. Scammers can now use generative AI to create highly personalized, realistic scams that are harder than ever to identify.

Despite the use of new technology, however, these scams still operate with the same basic goal: deceiving people into sending money or sharing their sensitive personal information.

How scammers use artificial intelligence

Scammers use AI to scale up their operations, build trust with targets, and create materials like images and videos that increase a scam’s credibility.

Automation and personalization at scale

Before AI, scammers relied on generic emails or messages sent to large audiences, with only limited personalization. Today, AI chatbots can hold simultaneous conversations with dozens or even hundreds of targets, responding in ways that are nearly indistinguishable from a real human.

Combined with AI’s ability to tailor messages based on publicly available or breached data, this allows criminals to carry out highly targeted attacks at a scale and with a level of realism that was previously impossible.

Social engineering enhanced by AI

Social engineering happens when scammers use deception or persuasion tactics to trick a user into taking an action. Traditionally, social engineering attacks were either highly targeted and hyper-personalized or very broad (mass phishing attempts sent to many thousands of people, for example).

AI tools have drastically increased the effectiveness of social engineering attacks. Modern tools can detect tone and adapt to it: if someone hesitates when asked for assistance or money, for example, AI can shift to a more reassuring approach. If someone asks a technical question, AI can often answer more fluently than a human scammer could. These convincing two-way conversations can encourage victims to comply.

Impersonation of trusted individuals or brands

AI makes it easier for scammers to impersonate people or organizations convincingly. Voice cloning can replicate a friend, family member, or authority figure using just a few seconds of audio, while AI-generated text can mimic writing styles in emails or messages.

Criminals can also produce realistic logos, websites, or social media profiles to pose as legitimate companies. These tools increase the believability of scams and make it harder to spot deception at a glance.

Why AI scams are harder to detect than traditional scams

Many traditional scams are relatively easy to spot. Red flags include poor grammar, spelling errors, and other inconsistencies, all introduced through human error. AI, however, rarely makes these mistakes. In general, AI-generated content is well-structured, uses proper grammar, and can be tailored to individual targets.

Common types of AI scams

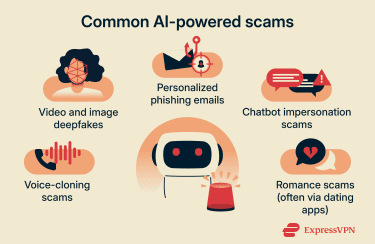

AI has given rise to a variety of novel scams; here are a few of the most prominent types.

AI voice cloning scams

One of the most high-profile AI-powered scams is voice cloning. AI can quickly generate recordings that convincingly mimic a loved one or an authority figure, and criminals use these clones to manipulate victims emotionally and financially.

For example, in 2025, a Florida woman lost $15,000 after a scammer called her with a fake recording of her daughter. In the recording, the daughter claimed to have been involved in a car accident, and then an “attorney” came on the line and said the money was needed to post the daughter’s bail.

Voice-cloning scammers also target corporate environments, aiming at executives, financial staff, or IT personnel who can grant access to sensitive systems or funds. For example, scammers may use AI-generated voices to imitate an IT help desk employee, asking for login credentials or “verification” details, or pose as an employee at a victim’s bank to bypass security checks.

AI tools make all of these impersonations more believable by replicating not just a voice but the tone and conversational style of the impersonated individual or role.

Deepfake video and image scams

Deepfake images and videos have reached a level of realism today that makes them difficult to distinguish from authentic content. While early deepfakes often showed obvious flaws or unnatural movement, modern AI-generated media can convincingly replicate real people and environments.

Criminals increasingly use deepfakes for fraud and extortion. In particular, deepfake video calls have been used to impersonate executives and colleagues. In a widely reported case in Hong Kong, a finance employee was tricked into transferring millions of dollars after participating in a video call featuring deepfake versions of company executives.

In consumer-facing scams, deepfake videos of celebrities and influencers have been widely used to promote fake cryptocurrency schemes and fraudulent giveaways. Deepfakes are also used in extortion campaigns, where attackers create compromising images or videos of their victims and threaten to release them unless a ransom is paid.

Overall, as AI video and image quality continues to improve, visual media can no longer be treated as proof of authenticity.

AI-generated phishing emails

Phishing emails have always been a popular scam tactic, as they’re low-cost and easy to execute. In the past, these emails were often poorly written, but AI can now produce cleaner, more persuasive text that’s much harder to recognize as phishing.

However, the impact of AI goes beyond just improving spelling and grammar. AI can use data scraped from public sources to tailor the emails to the target. This could include names, recent purchases, or workplace context. AI can also adapt language to suit regional spelling or industry-specific terminology, making messages feel more authentic. This capability also lets scammers operate in languages they don’t speak fluently themselves.

AI further increases the scale and effectiveness of phishing campaigns by enabling rapid testing and variation. Attackers can quickly generate multiple versions of a message, measure which ones get the most engagement, and continuously refine their approach. Varying phrasing and structure also enables some of these emails to bypass traditional spam filters and phishing detection methods.

AI-powered chatbots and impersonation

Today, people use chatbots for a variety of tasks, from technical support to pizza ordering, without hesitation. Scammers exploit this familiarity to pose as customer service agents, account representatives, or other trusted figures.

AI enables these chatbots to respond dynamically and convincingly, and to craft replies tailored to the victim. This means a bot can build trust gradually, guiding a user through processes that feel routine while subtly collecting sensitive information.

For example, on LinkedIn, an AI chatbot might send a message about a potential job to coax recipients into entering personal details. Similarly, scams on social platforms like Instagram and Discord exploit the familiarity of these messaging platforms, where users often respond quickly without verifying the sender.

By exploiting the trust users have in chatbots and the conversational capabilities of AI, these scams can bypass normal skepticism.

AI-assisted romance scams

Online dating is commonplace, and it’s not unusual for single people to strike up conversations with someone they met through a dating site. Romance scams have existed since the early days of online dating, but AI tools are now making these scams more convincing and harder to detect.

Romance scams are generally longer-term attacks, as scammers need to spend days or weeks building trust with a potential victim before asking for money under a false but believable pretext. Scammers pose as someone who can’t meet in person, usually because they’re abroad for work or in the military.

While human-operated scams can be inconsistent over time, AI-powered scams maintain a cohesive and consistent persona. AI, for example, will “remember” details like claimed location or eye color and can ensure the story remains coherent. This helps maintain the illusion and makes it harder for victims to detect the deception.

AI can also automate the process of grooming users over time, building rapport and trust until the scam reaches its goal. It can use human tactics like behavioral mirroring, matching the victim’s emotional tone so the conversation never feels disconnected. This builds a stronger connection that makes it much harder for the target to decline the scammer’s eventual request for help.

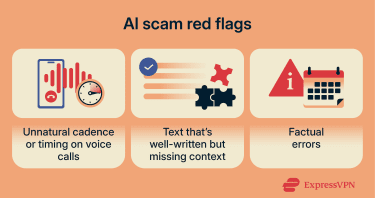

Warning signs of AI scams

While AI scams are growing increasingly difficult to detect, there are still some subtle signs that can give them away.

Slightly unnatural cadence or timing in voice calls

AI calls sound more realistic than ever, but the cadence of AI-generated speech is often slightly off. There might be unnatural pauses, timing issues, or other indications that the caller isn’t human. For example, AI calls often struggle with interruptions and with rapid-fire conversations and questions.

Hyper-polished but context-thin writing

AI-generated text can sound professional and polished, but it often lacks depth. For example, the text might use buzzwords and industry jargon without conveying any real meaning. AI-powered scam chatbots intentionally use vague language that can be reused across multiple targets. When pressed for answers, they may deflect or restate the initial claim, often trying to shift the conversation away from the question rather than providing a concrete answer.

One common example of this is in job-hunting scams, where you might receive a message that reads: “We noticed your profile on Indeed and think you’re a great match for this position. Get paid daily, earning up to $50 per hour.” It promises a lot but provides no concrete or identifying details.

Perfect grammar paired with factual errors

One warning sign to look out for is confident text that contains factual errors. AI often generates content with a tone of certainty while including incorrect details, dates, and more. An authoritative tone can discourage users from double-checking statements, and scammers exploit this, offering up false but plausible details.

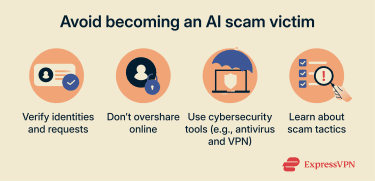

How to protect yourself from AI scams

AI scams are insidious, but there are steps you can take to protect yourself.

Verify identities and requests

When you receive an unexpected message from a company or from someone you know, make sure to verify the sender’s identity. If it’s an email, check the “From” address. While the display name might look like an official company account, the actual email address might not use the company’s official domain (it could be just one or two characters off, so check carefully).

That said, legitimate email accounts can also be compromised, so if the message is from a legitimate address but is unexpected, try to verify it through the official company website or via another trusted communication channel.
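
As a rough illustration of the “From” address check described above, the Python sketch below pulls the domain out of a From header and flags it when it sits within one or two character edits of a domain you trust. The `TRUSTED_DOMAINS` set, the `classify_sender` function, and the two-edit threshold are all assumptions made for this example rather than features of any email provider, and a "trusted" result only means the domain matches, not that the account behind it is safe.

```python
from email.utils import parseaddr

# Hypothetical allowlist for illustration; use the domains you actually deal with.
TRUSTED_DOMAINS = {"example.com", "example-bank.com"}


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: the number of single-character edits between a and b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution
            ))
        previous = current
    return previous[-1]


def classify_sender(from_header: str) -> str:
    """Return 'trusted', 'lookalike', or 'unknown' for a From header."""
    _display_name, address = parseaddr(from_header)
    domain = address.rpartition("@")[2].lower()
    if not domain:
        return "unknown"
    if domain in TRUSTED_DOMAINS:
        return "trusted"
    # A domain one or two characters off from a trusted one is a red flag.
    if any(edit_distance(domain, trusted) <= 2 for trusted in TRUSTED_DOMAINS):
        return "lookalike"
    return "unknown"
```

For instance, `classify_sender("Example Support <help@examp1e.com>")` returns `"lookalike"` because the domain differs from `example.com` by a single character, even though the display name looks official.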

When a request comes through for money, no matter how urgent it might seem, take the time to double-check. Just like traditional scams, AI scams often rely on urgency to pressure you into acting without thinking.

Limit personal information online

One reason AI scams are so effective is that they’re highly personalized. By limiting the amount of information available about you on the internet, you limit what attackers have to work with.

Review your privacy settings on every social media platform you use, especially those like Facebook or LinkedIn that host sensitive personal information, and be careful about what you share in recordings and on video-based platforms like TikTok.

Use security and privacy tools

Tools that help protect against AI-powered scams include:

- Antivirus/anti-malware software: Detects malicious links, attachments, and malware that scammers might try to deliver. Many suites also include phishing protection.

- Call screening: Many phone providers screen calls, prompting the caller to state their name and purpose. Automated scam calls often fail or drop off.

- Spam filters: Email providers like Gmail automatically filter phishing and spam, while third-party apps add extra protection against suspicious messages.

- Password managers: These tools help you to create and store strong, unique passwords and auto-fill credentials, protecting against fake websites.

- Multi-factor authentication (MFA): Adds extra verification, such as codes, biometrics, or security keys, to protect accounts even if a scammer uses AI tactics to steal your password.

- Virtual private networks (VPNs): A VPN like ExpressVPN can reduce passive data collection (such as IP-based location tracking) that scammers sometimes use to add contextual realism to attacks. It doesn’t stop phishing on its own, but it can limit background exposure.

- Identity monitoring tools: Services like ExpressVPN’s Identity Defender, available on select plans for U.S. users, can notify you if your personal data appears in breaches or on the dark web.
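
To make the password manager point above more concrete: autofill helps against fake websites because saved credentials are tied to the site where they were created. The Python sketch below is a simplified model of that idea, assuming an exact hostname match over HTTPS. Real password managers apply their own, more nuanced matching rules, and `should_autofill` is a hypothetical function name used only for this example.

```python
from urllib.parse import urlsplit


def should_autofill(saved_url: str, current_url: str) -> bool:
    """Offer saved credentials only on HTTPS pages whose hostname matches exactly."""
    saved = urlsplit(saved_url)
    current = urlsplit(current_url)
    if current.scheme != "https":
        return False
    # urlsplit lowercases the hostname and excludes any port or userinfo.
    return saved.hostname is not None and saved.hostname == current.hostname
```

Under this model, a login saved for `https://bank.example.com/login` would not be offered on `https://bank-example.com/login`, which is the kind of near-miss address a person might overlook.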

Stay informed about emerging scam tactics

Awareness is your strongest tool against scammers. If you know what to look for, you’ll be better equipped to identify a bad actor using AI. Government agencies like the Federal Trade Commission (FTC) and the Cybersecurity and Infrastructure Security Agency (CISA) share regular updates about emerging threats.

Financial institutions also frequently provide updates, especially around scams that target banking details or credit card information. Beyond that, following reputable cybersecurity firms can keep you apprised of potential threats.

What to do if you fall for an AI scam

If you fall victim to an AI scam, don’t panic or blame yourself. As scams grow more sophisticated, they become harder for even the most well-informed users to identify.

Steps to take immediately

Once you realize you’ve fallen for a scam, there are several steps you should take.

- Cease all communication with the scammer, whether that’s through email, a messaging app, or otherwise.

- Change your passwords across all of your accounts, starting with the most important ones like email and banking, and enable MFA wherever available.

- Immediately contact your financial institutions. If you’ve issued a payment to a scammer, there’s a chance your bank or credit card provider can stop it. Otherwise, your financial institution can freeze your accounts to stop any further withdrawals.

- Download all previous communications with the scammer. Record as much evidence as possible, including all messages, recordings, images, and more. Law enforcement agencies can use this information to build a case and, with any luck, recover damages. Messages and recordings also help authorities build a clear timeline of events.

Reporting AI-based fraud

Whether you’ve fallen victim to a scam or have just been targeted, you can file a report with the FTC at ReportFraud.ftc.gov. Cybercrime and AI-specific scams can also be reported to the FBI’s Internet Crime Complaint Center (IC3) at ic3.gov.

Reducing future risk

After you’ve taken care of the immediate tasks, take some time to review what happened. Pinpointing exactly what it was about the scam that drew you in can help you avoid similar incidents in the future.

You should also take the time to strengthen your overall cybersecurity posture. Make sure to set up alerts for any unusual logins or transactions on your accounts, and use reputable security tools to maximize your protection against scams and malicious sites going forward.

FAQ: Common questions about AI scams

Are AI scams illegal?

Scams in general are illegal, and that includes AI scams. In 2024, the Federal Trade Commission (FTC) stated that “using AI tools to trick, mislead, or defraud people is illegal.”

Are deepfake scams easy to detect?

Deepfakes are becoming increasingly difficult to detect. Previously, there were certain giveaways, like unnatural blinking or odd hand movements, but as AI becomes more sophisticated, its creations become harder to identify.

Can AI scams target businesses as well as individuals?

Yes, AI scams can target anyone, individuals and businesses alike. Companies of all sizes can be targeted with AI-generated phishing emails, deepfake calls, or other automated attacks designed to steal sensitive information or money.
